Layers All The Way Down: The Untold Story of Shader Compilation

2024-07-01

Background

As a game developer who works primarily with frameworks instead of engines, I find that one of the biggest pain points is the need to render efficiently on multiple platforms. For most platform-level tasks, like window management and input handling, SDL does a beautiful job and I barely have to think about it.

Rendering, by comparison, is a huge can of worms. Every platform has its own unique support matrix. For Windows you have D3D12/D3D11/Vulkan/OpenGL. For Apple platforms you have Metal and OpenGL, or OpenGL ES if you’re on iOS/tvOS. For Linux and Nintendo you have Vulkan and OpenGL. For PlayStation you have whatever the hell they have going on over there. For Xbox you have D3D12 only. Android has Vulkan and OpenGL ES. You get the picture.

All of these hardware acceleration APIs have similarities, but they have enough differences that mapping all the functionality you need onto them is nontrivial.

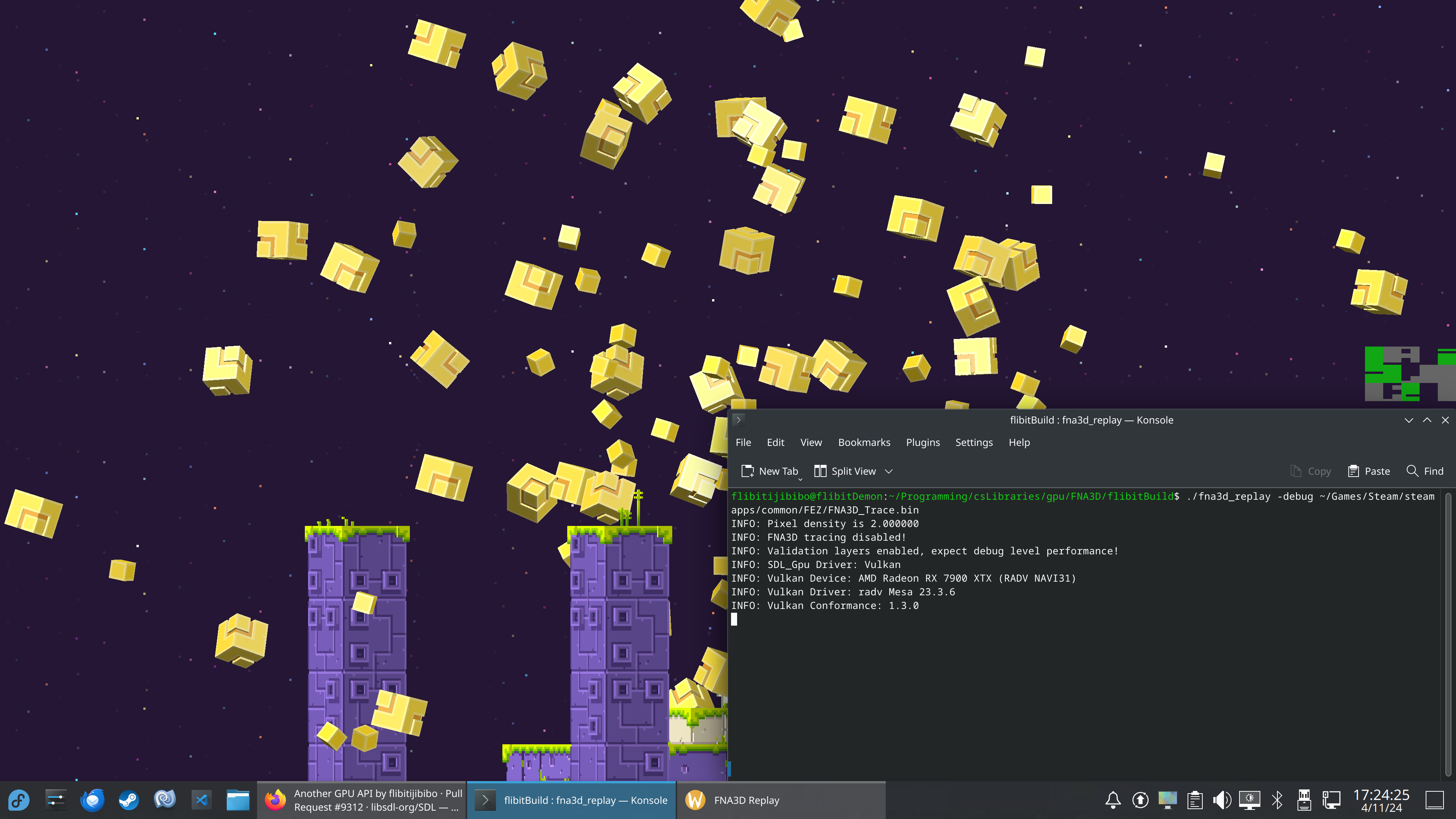

I am a co-maintainer of the FNA project, which preserves the XNA framework on contemporary platforms. As part of this work, I have spent the past few years on our cross-platform graphics abstraction, FNA3D, particularly its Vulkan implementation. This library allows us to translate XNA graphics calls to modern systems.

My work on that project led me to create Refresh, which has a similar architecture but is influenced by the structure of Vulkan and modernized in several key regards. For the past several months I have been working on submitting a version of that API as a proposal to SDL. Ryan C. Gordon (aka icculus) announced plans to include a GPU API in SDL a few years ago. Refresh only differed from his proposal in a few small details, and the implementation was mostly complete, so the FNA team submitted what I had written in the hopes of saving some time and developer effort.

For the most part the proposal has been well received, but there is one question we keep being asked. Presently we support Vulkan, Metal, and D3D11, with other backends coming soon. In our API, to create a shader object you must submit one of three things: SPIR-V, which we transpile at runtime for backends that can’t consume it directly; source code in a high-level language supported by a specific backend; or bytecode supported by a specific backend. Why did we structure things this way? To answer this question, I will try to explain some of the technical and political challenges in today’s graphics landscape.

What is a shader?

In Ye Olde Days, graphics APIs consisted of function entry points that would map to dedicated hardware logic. This was known as a fixed-function API. OpenGL was a fixed-function API until version 2, and Direct3D until version 8. You had the functions these APIs provided for modulating data, and that was it.

With the advent of GL2 and D3D8, the concept of a programmable shader stage was introduced. This allowed far greater rendering flexibility.

A shader is effectively a massively-parallel program that is executed on the GPU. The task of a shader is to transform large amounts of data in parallel. A vertex shader takes in vertex data and transforms each vertex in parallel. A fragment shader takes transformed information from the vertex shader and the hardware rasterization process to output a color value to each rasterized pixel in parallel. A compute shader transforms more-or-less arbitrary data in parallel.
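To make the “in parallel” part concrete, here is a CPU-side sketch in C of what a vertex stage does conceptually. The types and function names are made up for illustration, and real shaders are written in a shading language rather than C; the point is only that a vertex shader is a function of one vertex plus some shared constant data, and the hardware invokes it once per vertex.

```c
#include <stddef.h>

/* Hypothetical vertex layout: a 2D position only. */
typedef struct Vec2 { float x, y; } Vec2;

/* Per-draw constant data, analogous to a uniform buffer. */
typedef struct Uniforms { float scale; Vec2 offset; } Uniforms;

/* The "shader": a pure function of one input vertex and the uniforms.
   It never reads neighboring vertices, which is what lets a GPU run
   many invocations at the same time. */
static Vec2 vertex_main(Vec2 in, const Uniforms *u)
{
    Vec2 out = { in.x * u->scale + u->offset.x,
                 in.y * u->scale + u->offset.y };
    return out;
}

/* The "hardware": invoke the shader once per vertex. On a GPU these
   invocations run in parallel; this sketch runs them sequentially. */
static void run_vertex_stage(const Vec2 *in, Vec2 *out, size_t count,
                             const Uniforms *u)
{
    for (size_t i = 0; i < count; i++) {
        out[i] = vertex_main(in[i], u);
    }
}
```

Because `vertex_main` depends only on its own inputs, the order of the loop iterations doesn’t matter, which is exactly the property the GPU exploits.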

In Ye Slightly Less Olde Days, these APIs would take in high-level shader programs. You would write shader code in text format and pass it to the API, and the driver would attempt to compile the shader at runtime. This approach had significant drawbacks. Driver authors had to ship entire compilers in their drivers, and parsing and compiling takes a nontrivial amount of computation time, which is especially awkward when you’re trying to hit 16ms per frame or minimize up-front load times. It also meant that if the driver had a compiler bug, you would only find out at runtime on a specific install. Yikes! In contemporary APIs, you instead pass bytecode (or intermediate representation) to the driver, which cuts out a lot of the complexity. However, these bytecode programs still have to be compiled into a natively executable format.

Let’s back up a little. Modern programming languages are so abstracted that it’s easy to forget that programs have to be transformed into actual machine code to be executed. Consider the two dominant CPU instruction sets, x86 and ARM. These have become so standard that most of us take it for granted that basically every CPU is going to use one or the other. When you compile a program, you target one or the other, and you have support on the vast majority of actual hardware.

GPUs are no exception: their programs also have to run on actual hardware. But where x86 and ARM have won the instruction set wars in CPU-land, the situation with GPUs is not even remotely this standardized. Every single manufacturer has its own GPU architecture and instruction set architecture (ISA), and each typically supports multiple generations of its architecture at the same time. Nvidia has Ada Lovelace, Ampere, Turing, etc. AMD has RDNA3, RDNA2, and so on. In case you thought these were simple: AMD publishes its ISA specifications, and last I checked the RDNA2 document was 290 pages long.

When you submit bytecode to the driver, it has to compile that bytecode specifically for the graphics hardware in your machine. The compiled shader is only executable on that specific device and driver version. But it gets even worse: every graphics API has its own competing bytecode format. Vulkan has SPIR-V, D3D has DXIL/DXBC, and Metal has AIR. To the credit of the Khronos Group, SPIR-V at least attempts to be a standard portable intermediate representation (it’s almost like that’s the name of the format or something) in spite of literal corporate sabotage against its adoption. Thanks to SPIRV-Cross, we can translate compiled SPIR-V bytecode to high-level languages like HLSL and MSL, which allows for some measure of portability.
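Because SPIR-V is a documented format, a loader can at least sanity-check a blob before handing it to a driver or to SPIRV-Cross. The helper below is our own illustrative sketch, not part of any library: it checks only the fixed five-word header defined by the SPIR-V specification (magic number `0x07230203`, then version, generator, ID bound, and a reserved word that must be zero), and it does not validate the instruction stream.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SPIRV_MAGIC 0x07230203u
#define SPIRV_HEADER_WORDS 5

/* Returns 1 if `code` starts with a plausible SPIR-V header in host byte
   order, 0 otherwise. On success, optionally reports the version.
   This sketch rejects byte-swapped modules instead of handling them. */
static int spirv_header_looks_valid(const uint8_t *code, size_t size,
                                    unsigned *major, unsigned *minor)
{
    uint32_t words[SPIRV_HEADER_WORDS];

    /* A module is a stream of 32-bit words beginning with a 5-word header. */
    if (code == NULL || size < sizeof(words) || size % 4 != 0) {
        return 0;
    }
    memcpy(words, code, sizeof(words)); /* avoids unaligned reads */

    /* Word 0: magic number. */
    if (words[0] != SPIRV_MAGIC) {
        return 0;
    }
    /* Word 1: version, laid out as 0x00MMmm00. */
    if (major) *major = (words[1] >> 16) & 0xFFu;
    if (minor) *minor = (words[1] >> 8) & 0xFFu;

    /* Word 4: reserved schema word, which must be 0. */
    return words[4] == 0;
}
```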

Which brings me to the following potentially controversial statement:

Shaders are content, not code

I know, I know. You literally create shaders by writing shader code.

If only it were that simple. Let’s describe the process of writing a vertex shader in HLSL and loading it.

On D3D11:

- You write your shader using HLSL.

- At some point, either at runtime or at build time, you call D3DCompile to emit DXBC (DirectX Bytecode).

- At runtime, you call ID3D11Device_CreateVertexShader using your bytecode to obtain a shader object.

On Vulkan:

- You write your shader using HLSL (with SPIR-V binding annotations).

- At build time you use a tool like glslang to emit SPIR-V bytecode.

- At runtime, you call vkCreateShaderModule to obtain a shader object.

On Metal:

- You write your shader using HLSL.

- At build time you emit SPIR-V bytecode.

- You use SPIRV-Cross to translate SPIR-V to MSL.

- At runtime, you call Metal’s newLibraryWithSource to obtain a shader object.
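Written down as data, the three recipes above make the problem plain: each backend wants a different artifact and a different entry point. The table below is only a restatement of those lists in C; the struct and lookup function are hypothetical (not part of SDL or any other API), roughly the kind of thing a build script might consult to decide which artifacts to bake for which target.

```c
#include <stddef.h>
#include <string.h>

/* One row per backend: what the build must produce and what loads it. */
typedef struct ShaderRecipe {
    const char *backend;
    const char *build_steps;    /* offline transformations, in order */
    const char *runtime_format; /* what the graphics API consumes */
    const char *runtime_call;   /* call that creates the shader object */
} ShaderRecipe;

static const ShaderRecipe recipes[] = {
    { "D3D11",  "HLSL -> DXBC (D3DCompile)",           "DXBC",
      "ID3D11Device_CreateVertexShader" },
    { "Vulkan", "HLSL -> SPIR-V (e.g. glslang)",       "SPIR-V",
      "vkCreateShaderModule" },
    { "Metal",  "HLSL -> SPIR-V -> MSL (SPIRV-Cross)", "MSL source",
      "newLibraryWithSource" },
};

/* Returns the recipe for a backend name, or NULL if we have none. */
static const ShaderRecipe *find_recipe(const char *backend)
{
    for (size_t i = 0; i < sizeof(recipes) / sizeof(recipes[0]); i++) {
        if (strcmp(recipes[i].backend, backend) == 0) {
            return &recipes[i];
        }
    }
    return NULL;
}
```

Note that the same HLSL source fans out into three different baked artifacts, which is the sense in which shaders behave more like content than like code.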

Of course, a shader object by itself doesn’t do anything; it needs to be part of a pipeline object. The pipeline needs to be made aware of the vertex input structure and the data resources (textures, samplers, buffers) that the shader uses. There is no universal method for extracting this information from shader code. You must either provide it by hand or use language-specific tools to reflect on the code (which is expensive to do at runtime, and sometimes not available when shipping on particular devices). Furthermore, pipeline creation has backend-specific quirks. For example, in most APIs the compute shader workgroup size is provided in the shader bytecode, but on Metal the client is expected to provide this information at dispatch time. Devising a single interface that can accommodate all these discrepancies has been a significant challenge.
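The workgroup-size difference is a small example of what a portable layer has to paper over. In the sketch below (hypothetical types and helpers, not the SDL proposal’s API), the workgroup size is recorded when the pipeline is created so that it is still available at dispatch time, which Metal needs. The group-count arithmetic is the same ceiling division everywhere: the results are what you would pass as the group counts to Vulkan’s `vkCmdDispatch`, or as the threadgroups-per-grid argument to Metal’s `dispatchThreadgroups:threadsPerThreadgroup:` alongside the stored thread counts.

```c
#include <stdint.h>

/* Hypothetical pipeline record that remembers the workgroup size.
   On Vulkan/D3D the size also lives in the shader bytecode; on Metal
   the client must supply it at dispatch, so we keep a copy here. */
typedef struct ComputePipelineInfo {
    uint32_t threads_x, threads_y, threads_z;
} ComputePipelineInfo;

typedef struct DispatchSize { uint32_t x, y, z; } DispatchSize;

/* Groups needed to cover `items` work items, `per_group` items per
   group (ceiling division; `per_group` must be nonzero). Written this
   way to avoid overflowing `items + per_group - 1`. */
static uint32_t groups_for(uint32_t items, uint32_t per_group)
{
    return items / per_group + (items % per_group != 0);
}

/* Group counts needed to cover a w x h x d grid of work items. */
static DispatchSize dispatch_for_grid(const ComputePipelineInfo *p,
                                      uint32_t w, uint32_t h, uint32_t d)
{
    DispatchSize s = { groups_for(w, p->threads_x),
                       groups_for(h, p->threads_y),
                       groups_for(d, p->threads_z) };
    return s;
}
```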

Shaders are highly inflexible programs that require a lot of state to be configured correctly in order to function. An individual shader program is designed to fit a specific task. In my experience shaders are not something I iterate on frequently. I write a few shaders for a few specific rendering tasks, set up my pipelines, and then I don’t touch them unless some rendering requirements change. (There is an exception to this in some artist-driven shader workflows on modern game engines. I have some thoughts about how great this has been for customers, but the short version is that this is why you have to wait 20 minutes for shaders to compile on Unreal Engine games the first time you run them, or every time you update your graphics drivers.)
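A driver update invalidates compiled shaders because, as noted above, the driver’s output is only valid for the exact device and driver that produced it, so any cache of compiled shaders has to be keyed on both. Here is a minimal sketch of such a key, assuming a 64-bit FNV-1a hash and using identifiers loosely modeled on the `vendorID`, `deviceID`, and `driverVersion` fields of Vulkan’s `VkPhysicalDeviceProperties`. The function names are hypothetical; real drivers and engines use their own, generally more robust, cache schemes.

```c
#include <stddef.h>
#include <stdint.h>

/* 64-bit FNV-1a: a simple, non-cryptographic hash. */
static uint64_t fnv1a64(uint64_t h, const void *data, size_t len)
{
    const uint8_t *p = data;
    for (size_t i = 0; i < len; i++) {
        h ^= p[i];
        h *= 0x100000001b3ULL; /* FNV 64-bit prime */
    }
    return h;
}

/* Hypothetical cache key covering the bytecode plus the identity of the
   device and driver that compiled it. Changing any input, including
   the driver version, yields a different key, so stale machine code
   is never reused and the shader must be recompiled. */
static uint64_t shader_cache_key(const uint8_t *bytecode, size_t size,
                                 uint32_t vendor_id, uint32_t device_id,
                                 uint32_t driver_version)
{
    uint64_t h = 0xcbf29ce484222325ULL; /* FNV 64-bit offset basis */
    h = fnv1a64(h, bytecode, size);
    h = fnv1a64(h, &vendor_id, sizeof(vendor_id));
    h = fnv1a64(h, &device_id, sizeof(device_id));
    h = fnv1a64(h, &driver_version, sizeof(driver_version));
    return h;
}
```

Multiply this by thousands of shader permutations and you get the 20-minute first launch described above.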

As an analogy, consider the process of efficiently rendering a game that uses 2D sprites. You could load each individual sprite as an individual texture, but now you have to change textures for each draw call, which is incredibly inefficient. The correct way to do this is to pack the sprites into a spritesheet at build time so they are all on the same texture, and then you can batch multiple sprites into a single draw call. In general, content that is convenient to produce is not content that is efficient for the computer to utilize. There is a step required to transform that content into something efficient.
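The spritesheet step in this analogy can be as simple as a shelf packer, sketched below. This is one common, deliberately naive technique chosen for illustration, not what any particular tool does: sprites are placed left to right in horizontal rows (“shelves”), and a new shelf starts whenever the next sprite doesn’t fit in the remaining width.

```c
#include <stddef.h>

typedef struct Sprite {
    int w, h; /* input: sprite size in pixels */
    int x, y; /* output: placement inside the atlas */
} Sprite;

/* Packs sprites into shelves of an atlas `atlas_w` pixels wide.
   Returns the atlas height used, or -1 if a sprite is wider than the
   atlas. Sprites are placed in the given order; sorting them by height
   first usually wastes less space. */
static int pack_shelves(Sprite *sprites, size_t count, int atlas_w)
{
    int shelf_y = 0, shelf_h = 0, cursor_x = 0;

    for (size_t i = 0; i < count; i++) {
        Sprite *s = &sprites[i];
        if (s->w > atlas_w) {
            return -1;
        }
        if (cursor_x + s->w > atlas_w) { /* row is full: start a new shelf */
            shelf_y += shelf_h;
            shelf_h = 0;
            cursor_x = 0;
        }
        s->x = cursor_x;
        s->y = shelf_y;
        cursor_x += s->w;
        if (s->h > shelf_h) {
            shelf_h = s->h; /* shelf is as tall as its tallest sprite */
        }
    }
    return shelf_y + shelf_h;
}
```

The packed `x`/`y` positions become texture coordinates at runtime, so every sprite can be drawn from one texture in one batch; the convenient input (many small images) has been baked into an efficient output.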

To restate the shader compilation chain clearly:

high-level source -> bytecode compiler -> (bytecode transpiler -> bytecode compiler) -> API frontend -> driver compiler -> ISA

My point is: the process of turning shader code into something executable is a whole lot more like content baking and loading than it is like compiling your game’s codebase. Shaders require complex transformations with many contextual dependencies to be usable, and they are generally not part of the everyday code development workflow. In the usual production scenario, shaders only need to be written or updated as art requirements change.

Why is loading shaders such a mess?

To answer this question, we have to examine the stakeholders in the hardware and software industries, and what their vested interests are.

Let’s say you’re Apple. Your entire business model is predicated upon locking your customers into a walled garden. What advantage do you have to gain from creating or supporting a portable shader format? You control every level of your ecosystem, from chip manufacturing all the way to the OS and application level. It’s your way or the highway. All that supporting easily-portable software does for you is allow your customers to switch away from Apple devices more easily.

Microsoft is a similar story, at least when it comes to Xbox. The only API they allow you to use is D3D12. Why would they support anything else? They control the hardware and drivers completely. Fascinatingly, the only manufacturer that has embraced open standards is … Nintendo, which supports Vulkan on Switch. I have no idea why they decided to do that, but I’m certainly not complaining.

When it comes to GPU manufacturers, the story isn’t much different. To their credit, the GPU manufacturers do contribute to open standards at the API level (Vulkan grew out of Mantle, an AMD API that was donated to the Khronos Group). But there is little hope of a common shader ISA ever coming to fruition. According to the latest Steam Hardware Survey, Nvidia holds 75% of the GPU market share. Collaborating on a standardized ISA with other manufacturers would just slow them down and allow competitors to gain insight into their architecture development processes.

Ultimately, there is no economic incentive for these actors to cooperate with each other. The cost of all this fragmentation just falls on developers who want their programs to be able to run on different machines without too much trouble. C’est la vie.

The shader language question

Wouldn’t a portable high-level shader language solve these problems? I understand the appeal of this approach. It would mean that at the API level the client wouldn’t have to worry about all these different formats. They could just write shader code, pass it to the API, and it would Just Work. We could even provide an up-front way to query shader resource usage. It all seems so simple!

First of all, I’m not sure that this approach actually addresses the root problem. As I have made clear earlier, we have to translate code into something that can actually run on a variety of graphics devices. This is not exactly something you can hack out over the course of a long weekend.

The bigger issue is this: why should it be the job of a small, overworked group of open-source developers to solve a problem that the entire industry both created and lacks the motivation to solve? If we are seriously considering that our only reasonable solution is to design and maintain not only an entire programming language, but also a bytecode format and a translation system that converts that bytecode into the formats drivers can actually load, I think we have lost the plot completely. The fundamental problem here is that no standardized shader ISA, or even a standardized bytecode, exists, and no vendor has any material incentive to create or agree upon one. The question is one of fragmentation, and fragmentation is extremely hard to fix from where we sit in the stack.

The problem we want to solve with our SDL GPU proposal is that graphics APIs are fragmented to a degree that makes it highly difficult to write portable hardware-accelerated applications. Addressing that fragmentation at the code calling level was challenging enough - addressing fragmentation at the code generation level is an order of magnitude more complex than what we’ve already accomplished.

It’s difficult to overstate just how complex taking on this task would be. WebGPU is a W3C proposal involving some of the most powerful tech corporations in the world, with full-time staff dedicated to it, and its adoption of a custom shader language (WGSL) delayed it by several years. WebGPU still isn’t done. (It might never be.)

Furthermore, portable high level shader languages already exist. Consider HLSL: it’s extremely widely adopted and can compile to DXBC, DXIL, and SPIR-V, which means that it can be used (with the help of SPIRV-Cross in the case of Apple platforms) as a source language for any currently available desktop graphics API. It’s not really clear that we could materially improve over what already exists, and certainly not in a short amount of time.

I don’t want it to seem like I’m against attempting a high-level approach. It would be great to have a library with a batteries-included solution that works for 95% of use cases. I just think that forcing a high-level language at our API level delays the project significantly, and maybe even indefinitely, and imposes strong limitations on workflows. Developers are very opinionated about their workflows, and even with the advantages it’s not clear that forcing everyone into using a custom high-level language would go over well. It could be enough to dissuade some people from using the API entirely.

Our approach doesn’t disallow the creation of a portable shader language, but it means that we don’t have to depend on one. Our approach is low maintenance, works right now, and doesn’t lock you into a specific workflow.

Our proposed approach

Here is our shader creation setup as it currently stands:

```c
typedef enum SDL_GpuShaderStage
{
    SDL_GPU_SHADERSTAGE_VERTEX,
    SDL_GPU_SHADERSTAGE_FRAGMENT
} SDL_GpuShaderStage;

typedef enum SDL_GpuShaderFormat
{
    SDL_GPU_SHADERFORMAT_INVALID,
    SDL_GPU_SHADERFORMAT_SPIRV,    /* Vulkan, any SPIRV-Cross target */
    SDL_GPU_SHADERFORMAT_HLSL,     /* D3D11, D3D12 */
    SDL_GPU_SHADERFORMAT_DXBC,     /* D3D11, D3D12 */
    SDL_GPU_SHADERFORMAT_DXIL,     /* D3D12 */
    SDL_GPU_SHADERFORMAT_MSL,      /* Metal */
    SDL_GPU_SHADERFORMAT_METALLIB, /* Metal */
    SDL_GPU_SHADERFORMAT_SECRET    /* NDA'd platforms */
} SDL_GpuShaderFormat;

typedef struct SDL_GpuShaderCreateInfo
{
    size_t codeSize;
    const Uint8 *code;
    const char *entryPointName;
    SDL_GpuShaderFormat format;
    SDL_GpuShaderStage stage;
    Uint32 samplerCount;
    Uint32 storageTextureCount;
    Uint32 storageBufferCount;
    Uint32 uniformBufferCount;
} SDL_GpuShaderCreateInfo;

extern SDL_DECLSPEC SDL_GpuShader *SDLCALL SDL_GpuCreateShader(
    SDL_GpuDevice *device,
    SDL_GpuShaderCreateInfo *shaderCreateInfo);
```

Because we require that you provide the format alongside your code, any kind of online or offline compilation scheme you could desire is possible. For example, in your build step you could compile HLSL to SPIR-V, use SPIRV-Reflect to extract resource usage information from the shader, and then pass that data to SDL_GpuCreateShader. You could also use SPIRV-Cross at build time to generate MSL from your SPIR-V output and then load that MSL code on your Apple targets. In the spirit of my declaration that shaders are content and not code, I think it makes sense to develop a workflow that matches how you like to work with shaders. There are a lot of tradeoffs to consider and there’s no one right answer. If you prefer GLSL to HLSL, go for it. Do what works for your project.
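The resource counts in `SDL_GpuShaderCreateInfo` have to come from somewhere. One low-tech option, assuming your build step already runs a reflection tool such as SPIRV-Reflect, is to have it write a small sidecar file next to each compiled shader and read that file at load time. The `key=value` format, the key names, and the parser below are all hypothetical; the struct simply mirrors the four count fields of the proposed `SDL_GpuShaderCreateInfo` so the sketch compiles without SDL.

```c
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Local mirror of the resource-count fields in SDL_GpuShaderCreateInfo. */
typedef struct ShaderResourceCounts {
    uint32_t samplerCount;
    uint32_t storageTextureCount;
    uint32_t storageBufferCount;
    uint32_t uniformBufferCount;
} ShaderResourceCounts;

/* Parses a hypothetical sidecar written at build time, one "key=value"
   pair per line. Unknown keys are treated as errors so a typo can't
   silently produce a shader with missing bindings.
   Returns 1 on success, 0 on failure. */
static int parse_shader_meta(const char *text, ShaderResourceCounts *out)
{
    memset(out, 0, sizeof(*out));
    while (*text != '\0') {
        char key[32];
        unsigned value;
        int consumed = 0;

        /* Skip leading whitespace, read the key up to '=', then the value. */
        if (sscanf(text, " %31[^=]=%u%n", key, &value, &consumed) != 2) {
            return 0;
        }
        if (strcmp(key, "samplers") == 0)              out->samplerCount = value;
        else if (strcmp(key, "storage_textures") == 0) out->storageTextureCount = value;
        else if (strcmp(key, "storage_buffers") == 0)  out->storageBufferCount = value;
        else if (strcmp(key, "uniform_buffers") == 0)  out->uniformBufferCount = value;
        else return 0;

        text += consumed;
        /* Skip line endings and trailing whitespace before the next pair. */
        while (*text == '\n' || *text == '\r' || *text == ' ' || *text == '\t') {
            text++;
        }
    }
    return 1;
}
```

At load time you would copy these counts, together with the baked bytecode and its format, into the create-info struct before calling `SDL_GpuCreateShader`.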

One of the best illustrations of how flexible this approach is comes from our implementation of an SDL GPU backend for FNA3D. FNA is a preservation project, meaning that we do not always have access to source code. When you shipped an XNA game, the shaders came in a binary format called FX bytecode. We have to translate FX bytecode to formats that work on modern graphics APIs, and we accomplish this with a library called MojoShader. Since MojoShader can already translate FX bytecode to SPIR-V, and SPIRV-Cross exists, we can use SPIR-V as the source of truth for all the GPU backends. In essence, we have an online shader compilation pipeline with FX bytecode as the source, and it works quite nicely.

I think we’ve done our best to arrive at a decent compromise approach considering the situation we’re in. You could argue that having to provide different shader formats for different backends means that the API isn’t truly portable - but a solution that doesn’t exist is the least portable of all.